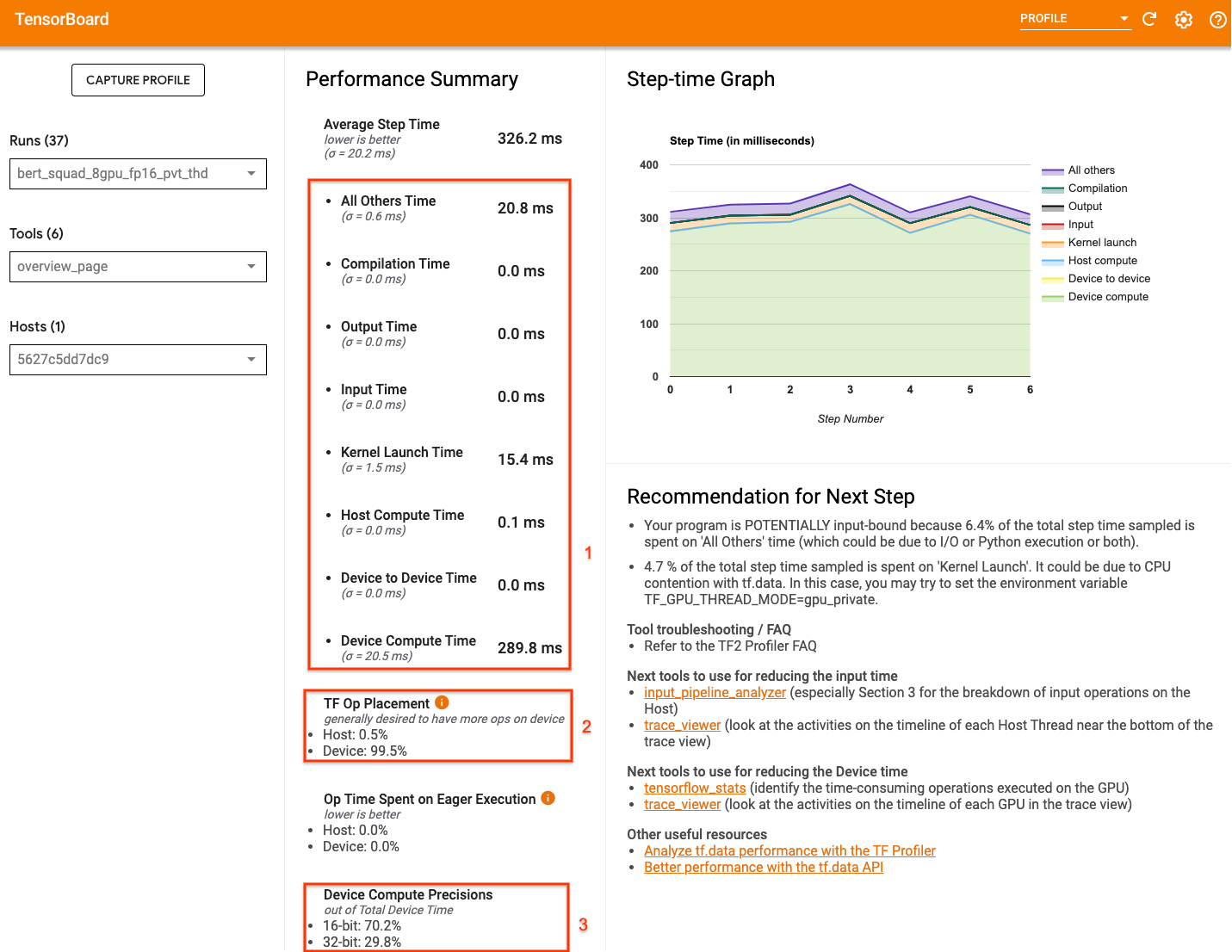

A quick guide to distributed training with TensorFlow and Horovod on Amazon SageMaker | by Shashank Prasanna | Towards Data Science

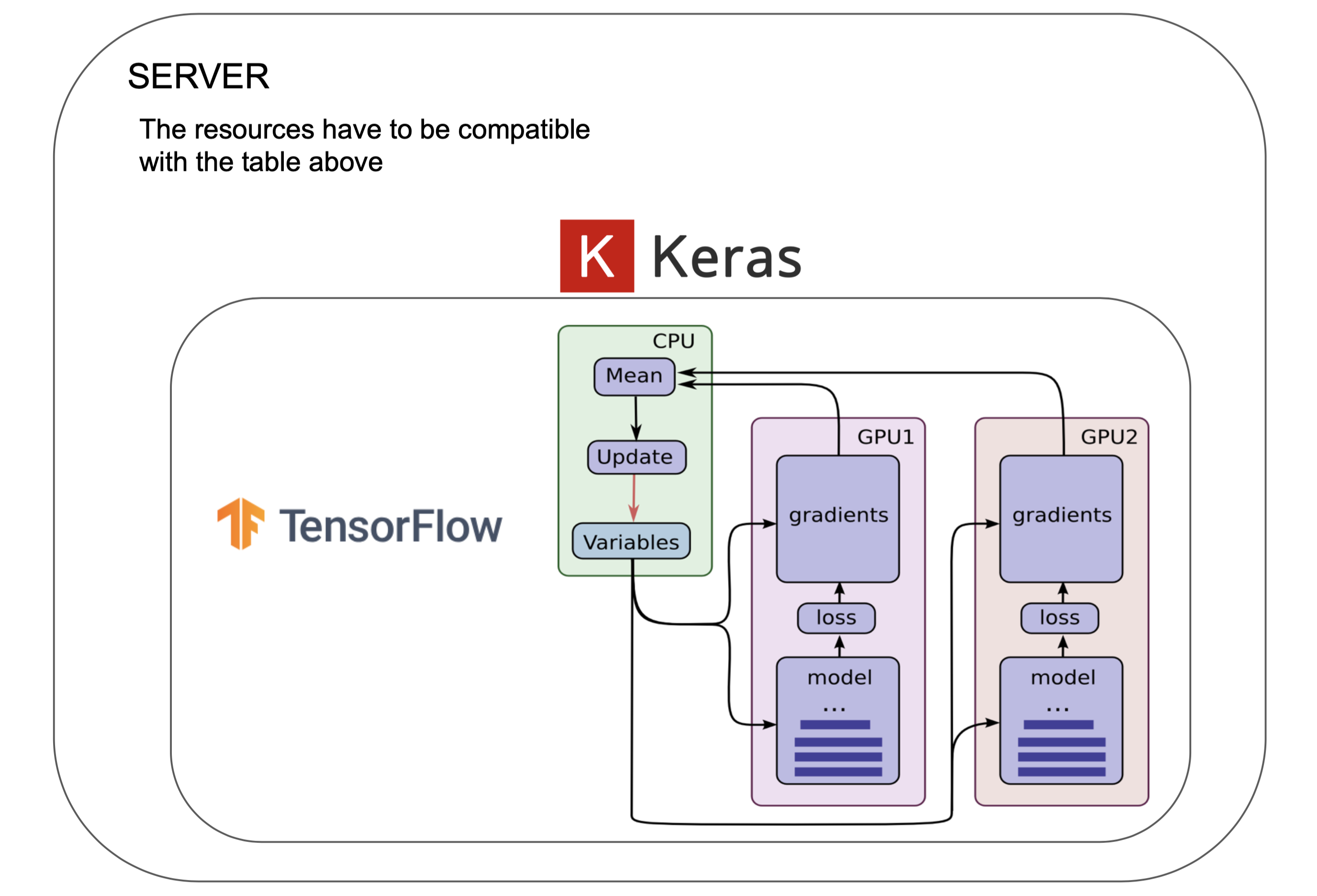

Using the Python Keras multi_gpu_model with LSTM / GRU to predict Timeseries data - Data Science Stack Exchange

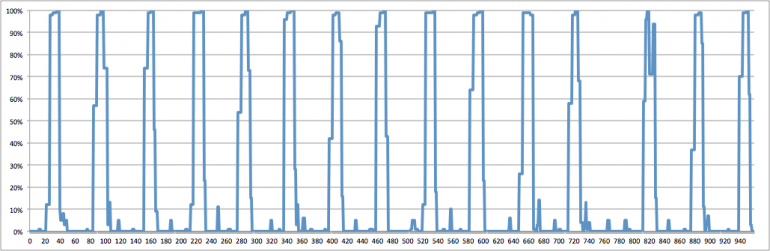

python - Why only one of the GPU pair has a nonzero GPU utilization under a Tensorflow / keras job? - Stack Overflow